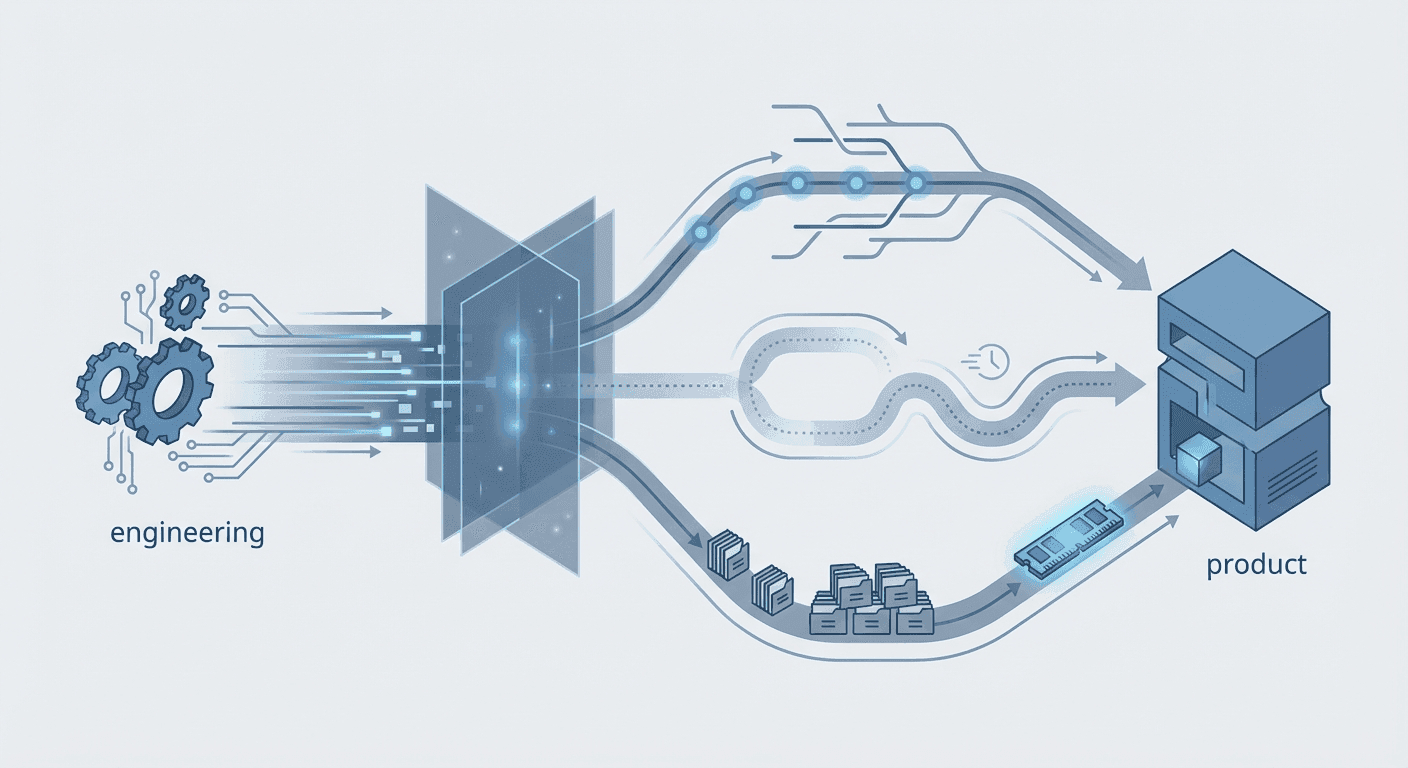

Why product's pace can't match engineering's AI leap

You've probably seen the numbers. In GitHub's controlled experiment, developers using Copilot completed a coding task about 55% faster. The 2025 DORA Report shows 90% global AI adoption among developers, with over 80% reporting enhanced productivity.

Product teams aren't experiencing the same acceleration. The gap keeps widening.

This isn't about rebalancing headcount or adopting better tools. Product, design, and research face structural constraints that AI—as it exists today—can't dissolve.

Understanding these barriers is the first step toward moving differently.

Why product teams can't just "move faster"

External dependencies slow validation

Unlike code, which can be generated and tested in a controlled environment, product validation depends on the unpredictable rhythms of the real world.

A/B tests require sufficient user traffic and duration to reach statistical significance. You can't LLM your way around that. User research—even when automated—involves recruiting, scheduling, and conducting sessions. All of that introduces real-time delays.

Product validation hinges on users' availability and behavior, not just internal velocity.
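To make the traffic constraint concrete, here is a rough sketch of the arithmetic behind an A/B test's duration. It uses the standard normal-approximation formula for comparing two conversion rates. The 10% baseline, the hoped-for 12%, and the 1,000 eligible users per day are made-up inputs, not figures from any real product.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline: float, expected: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per variant for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    effect = expected - baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_to_significance(n_per_arm: int, daily_users: int, arms: int = 2) -> int:
    """Calendar days needed if all eligible daily traffic enters the test."""
    return math.ceil(n_per_arm * arms / daily_users)


# Hypothetical inputs: 10% baseline conversion, hoping to detect a lift to 12%,
# with 1,000 eligible users per day.
n = sample_size_per_arm(0.10, 0.12)
print(n, days_to_significance(n, daily_users=1000))
```

With these assumed inputs the test needs a little under 4,000 users per variant, or roughly eight days of traffic. Halve the expected lift and the required sample roughly quadruples. No amount of drafting speed changes that.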

Coordination creates unavoidable latency

Product development is inherently collaborative. Conway's Law holds that the systems an organization builds mirror its communication structure. More stakeholders means slower alignment.

Brooks's Law says that adding people to a late software project makes it later. The same logic applies here: adding product managers doesn't necessarily accelerate progress. It can increase communication overhead instead.

And approval bottlenecks—compliance, legal, brand—frequently land on product teams. These processes slow delivery without always improving quality. AI can draft a proposal quickly. Getting alignment and approvals is still a slow, human process.

AI's strengths don't match product's challenges

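The "Jagged Frontier" study from Harvard Business School and BCG offers a crucial insight: AI improves performance on tasks inside its capability frontier, but people relying on it can actually perform worse on tasks outside that frontier. Much novel, strategic work sits outside it.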

Product managers can write faster. But AI can't prioritize or strategize faster—it doesn't fully grasp context or the nuanced tradeoffs involved in strategic decisions.

Risk management requires human oversight

LLM hallucinations are an unacceptable risk in regulated or sensitive contexts. In health, finance, or legal, human review isn't just preferred. It's often mandatory.

This limits how quickly product teams can ship AI-assisted work. You can't use AI to bypass critical risk management.

Governance obligations persist

DORA's research consistently shows that heavy approval processes hinder performance. But product teams often become the bottleneck because they're responsible for navigating external approvals around privacy, security, and legal.

These governance obligations remain human-driven. AI hasn't touched them yet.

Engineering benefits from higher automation potential

Engineering naturally has a higher ceiling for automation gains. The 55% speedup in the Copilot study is one example.

But even impressive individual gains don't translate to system-level performance unless other functions improve too. The 2025 DORA Report notes a "trust paradox": AI outputs are useful but still require human verification. That bottleneck remains.

How product teams can move differently

If product can't move faster, it can move smarter. The answer isn't matching engineering's pace. It's redesigning how product discovers, decides, and aligns.

Redefine velocity around learning, not shipping

Product acceleration won't come from collapsing build cycles. It'll come from compressing feedback loops.

Shift your metric from "time to release" to "time to validated insight."

AI can help by accelerating synthesis—turning user research, usage data, and market signals into clearer hypotheses faster.
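One way to track "time to validated insight" is to log when each hypothesis was proposed and when evidence settled it, then watch the median. The sketch below assumes a hypothetical `Hypothesis` record. The names and dates are illustrative only.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class Hypothesis:
    name: str
    proposed: date
    validated: Optional[date] = None  # None while evidence is still pending


def median_days_to_insight(hypotheses: list[Hypothesis]) -> Optional[float]:
    """Median days from proposal to a validated answer, ignoring open items."""
    durations = [(h.validated - h.proposed).days for h in hypotheses if h.validated]
    return median(durations) if durations else None


# Illustrative backlog; the third hypothesis has not been settled yet.
backlog = [
    Hypothesis("Shorter onboarding lifts activation", date(2025, 1, 6), date(2025, 1, 27)),
    Hypothesis("Annual plan discount reduces churn", date(2025, 1, 13), date(2025, 2, 24)),
    Hypothesis("In-app tips cut support tickets", date(2025, 2, 3)),
]
print(median_days_to_insight(backlog))
```

A hypothesis counts as validated here whether the evidence confirmed or refuted it. Either outcome is a learning, which is the point of the metric.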

Put AI where the coordination friction lives

AI shouldn't just generate PRDs or competitive summaries. It should mediate coordination friction.

Copilots can pre-draft stakeholder updates, summarize alignment threads, or identify recurring blockers in decision logs. The win isn't automating deliverables—it's orchestrating decisions.

Simulate the slow loops

You can't skip real-world validation. But you can model it.

Synthetic A/B testing, agent-based simulations, and AI-driven persona testing can de-risk ideas before live deployment. They don't replace real data—they reduce the cost of learning while waiting for it.

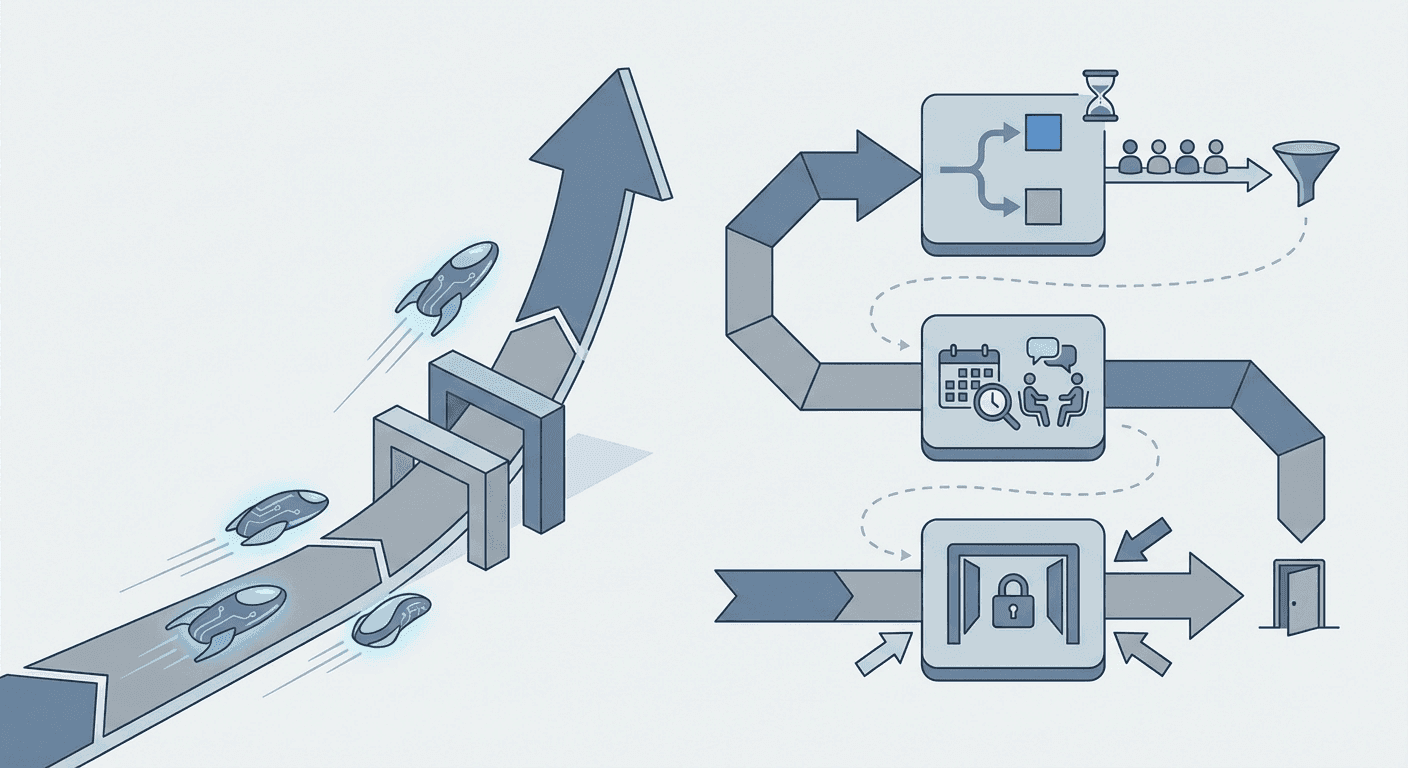

Restructure governance around risk tiers

AI adoption in product often stalls because every decision gets treated as high-risk.

A tiered governance model creates headroom for speed without sacrificing oversight. Automate and delegate low-risk validations. Escalate only the few decisions that truly require scrutiny.
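A tiered model can start as a few explicit rules that anyone on the team can read. The sketch below is one possible shape, not a compliance framework. The tier names, the `Change` fields, and the routing rules are all assumptions you would replace with your own legal and security criteria.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    LOW = "auto-approve"
    MEDIUM = "single reviewer"
    HIGH = "full review board"


@dataclass
class Change:
    description: str
    touches_personal_data: bool = False
    regulated_domain: bool = False
    user_facing: bool = True
    reversible: bool = True


def classify(change: Change) -> Tier:
    """Route a proposed change to the lightest review path that still covers its risk."""
    if change.touches_personal_data or change.regulated_domain:
        return Tier.HIGH    # privacy or regulatory exposure always escalates
    if change.user_facing and not change.reversible:
        return Tier.MEDIUM  # hard-to-undo changes get one human check
    return Tier.LOW         # everything else can be delegated or automated


for change in (
    Change("Reword empty-state copy"),
    Change("Migrate customers to new pricing", reversible=False),
    Change("Export usage data to a vendor", touches_personal_data=True),
):
    print(f"{change.description}: {classify(change).value}")
```

Writing the rules down matters more than the code. Once the criteria are explicit, most changes stop defaulting to the heaviest path.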

Build decision memory, not just artifacts

AI excels at recall and at reasoning over the context it is given. Capturing structured decision logs, assumptions, and tradeoffs lets future PMs, and AI copilots, reason from institutional memory rather than reinventing it.

This turns AI from a writing assistant into a thinking amplifier.
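A decision log doesn't need special tooling to be useful. Here is a minimal sketch of a structured record that serializes to JSON Lines, a format that is easy to search and easy to hand to a copilot as context. The field names and sample decisions are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DecisionRecord:
    title: str
    decided_on: str  # ISO date string, e.g. "2025-03-04"
    decision: str
    assumptions: list[str] = field(default_factory=list)
    tradeoffs: list[str] = field(default_factory=list)
    revisit_if: list[str] = field(default_factory=list)


def to_jsonl(records: list[DecisionRecord]) -> str:
    """Serialize records one JSON object per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


def find_by_assumption(records: list[DecisionRecord], keyword: str) -> list[str]:
    """Titles of decisions that rest on an assumption mentioning the keyword."""
    keyword = keyword.lower()
    return [r.title for r in records
            if any(keyword in a.lower() for a in r.assumptions)]


log = [
    DecisionRecord(
        title="Defer SSO to Q3",
        decided_on="2025-03-04",
        decision="Ship team invites first; SSO follows.",
        assumptions=["Enterprise deals in pipeline do not block on SSO"],
        tradeoffs=["Slower enterprise onboarding for one quarter"],
        revisit_if=["Two or more deals stall on SSO"],
    ),
    DecisionRecord(
        title="Keep free tier at 3 projects",
        decided_on="2025-03-18",
        decision="No change to free tier limits.",
        assumptions=["Most free users create fewer than 3 projects"],
    ),
]
print(find_by_assumption(log, "enterprise"))
print(to_jsonl(log))
```

The `revisit_if` field is the part teams tend to skip. It records the condition under which the decision should be reopened, so that when an assumption breaks, someone can find every decision that depended on it.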

Design the product stack for embedded intelligence

Engineering got Copilot in their IDE. Product needs the equivalent inside Figma, Jira, and Confluence—embedded, contextual copilots where decisions actually get made.

Until then, AI remains an external assistant rather than an integrated amplifier.

Where to start

The path forward isn't matching engineering's pace. It's redesigning how product discovers, decides, and aligns.

Start by identifying which bottleneck most constrains your team's velocity. Then apply AI where coordination friction lives, not where deliverables get created.

The teams that figure this out won't just keep up with engineering. They'll change what product velocity means entirely.
