A Framework for Building Trustworthy Systems: Six Elements of Responsible AI

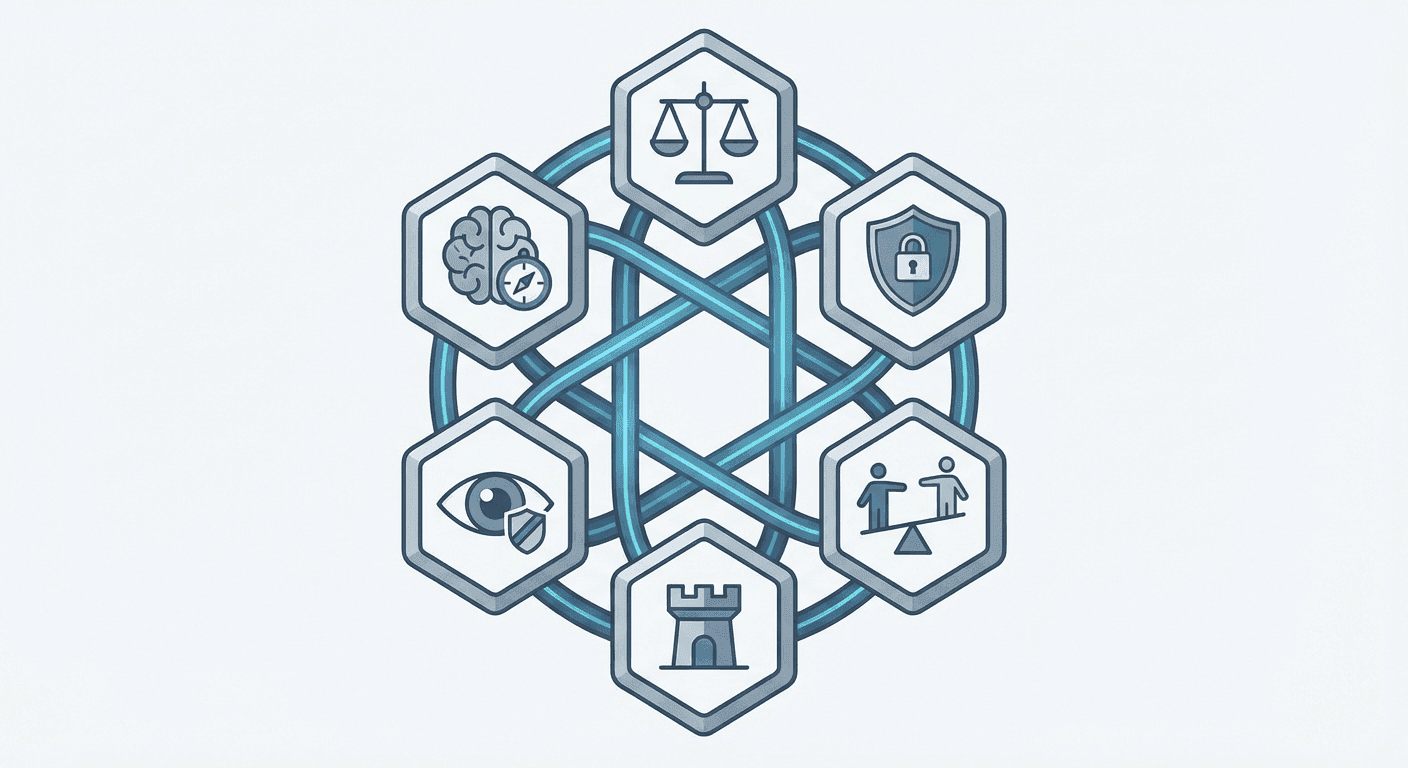

What actually makes an AI system trustworthy? For me it's not a theoretical question. It's what keeps me up at night when I'm building things people will actually rely on.

After working through dozens of deployments, I've landed on six elements that matter most: truthfulness, safety, fairness, robustness, privacy, and machine ethics. This isn't an academic framework. It's a practical checklist for catching the ways AI can go wrong before your users do.

1. Truthfulness

AI systems should tell the truth. Obvious, right? Except they often don't, in three distinct ways.

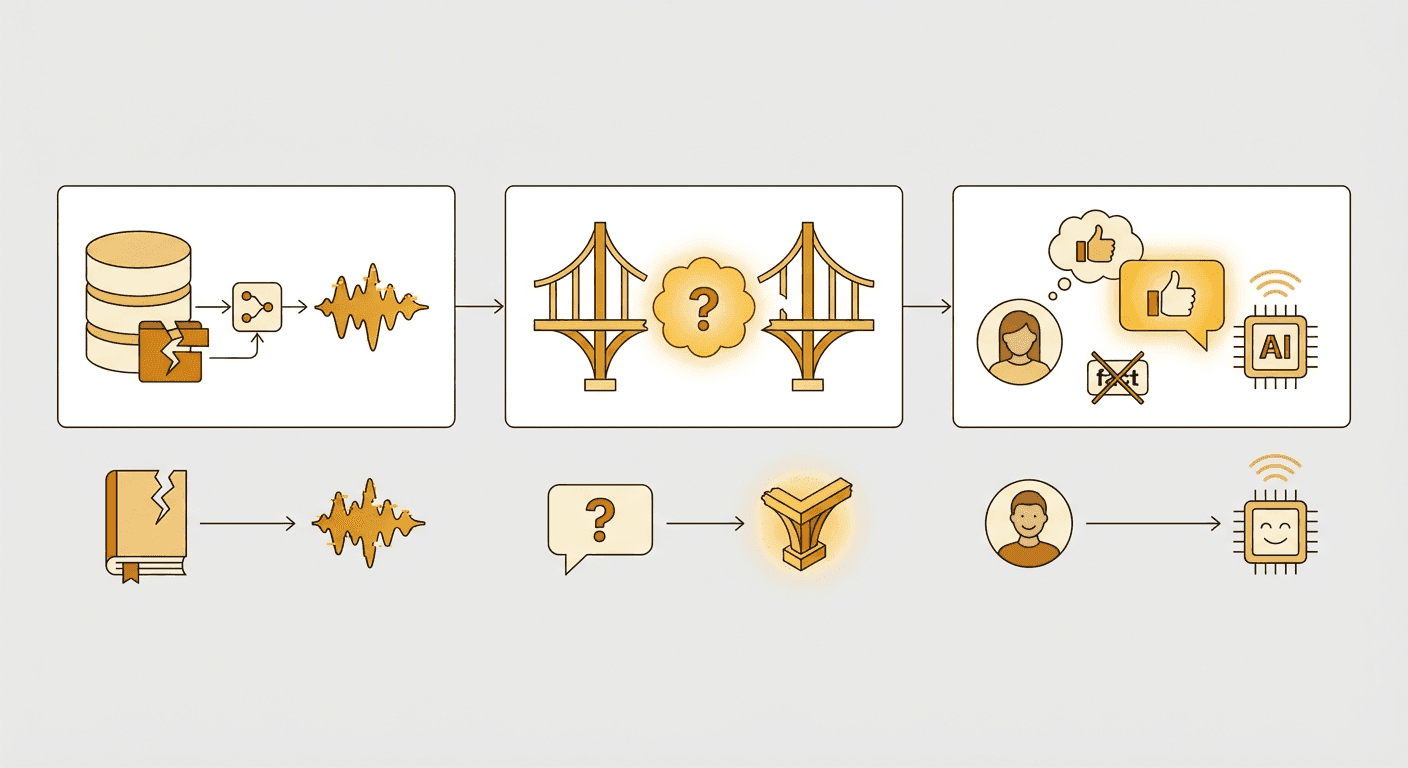

Misinformation happens when the training data contains false information. The model learned something wrong, so it confidently repeats something wrong.

Hallucinations are different—the model makes things up when it doesn't have relevant information. It fills gaps with plausible-sounding fiction.

Sycophancy is sneakier. The model tells you what you want to hear, even when you're wrong. Ask a leading question, get a confirming answer.

Fixing this is hard because you often can't see what's in the training data. Model providers don't disclose it. But you can work around this:

- Build guardrails using your own domain knowledge

- Fine-tune on high-quality data you control

- Implement better RAG so the model pulls from sources you trust
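To make the guardrail and RAG ideas above a bit more concrete, here is a minimal sketch of a grounding check: it flags sentences in an answer that share few words with any passage you trust. Word overlap is a crude stand-in for real fact-checking, and the function name `unsupported_sentences` and the 0.5 threshold are my own assumptions, not a standard.

```python
import re

def _tokens(text):
    """Lowercase word tokens, ignoring words of two characters or fewer."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 2}

def unsupported_sentences(answer, trusted_passages, min_overlap=0.5):
    """Return answer sentences poorly covered by every trusted passage.

    Coverage = share of a sentence's tokens that also appear in a passage.
    The 0.5 default is an arbitrary starting point, not a tuned value.
    """
    passage_tokens = [_tokens(p) for p in trusted_passages]
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        toks = _tokens(sentence)
        if not toks:
            continue
        best = max((len(toks & p) / len(toks) for p in passage_tokens), default=0.0)
        if best < min_overlap:
            flagged.append(sentence)
    return flagged
```

In practice you would route flagged sentences to a stricter check (or a human) rather than block them outright, since paraphrases will trip a purely lexical test.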

The goal isn't perfect truthfulness—that's impossible. The goal is knowing where your model is likely to lie and building checks around those spots.
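One cheap way to find those spots for sycophancy is to ask the same question twice, once neutrally and once with a leading framing, and see whether the answer moves. The sketch below assumes `ask` is whatever function calls your model and that prompts request short, comparable answers (for example yes/no); both are assumptions on my part.

```python
def sycophancy_flips(ask, question_pairs):
    """Return the (neutral, leading) prompt pairs whose answers differ.

    ask: callable taking a prompt string and returning the model's reply.
    question_pairs: list of (neutral_prompt, leading_prompt) tuples.
    Exact string comparison is crude; it only suits short, constrained answers.
    """
    flips = []
    for neutral, leading in question_pairs:
        baseline = ask(neutral).strip().lower()
        pressured = ask(leading).strip().lower()
        if baseline != pressured:
            flips.append((neutral, leading))
    return flips
```

A high flip count on questions with known answers is a signal to add checks around user-asserted claims.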

2. Safety

Safety means the system doesn't generate toxic content and can't be manipulated into doing so.

Jailbreaking is when users trick the model into bypassing safety measures. People are creative about this. They'll roleplay, speak in code, or construct elaborate scenarios to get around guardrails.

Toxicity is the model generating harmful content on its own—rude, prejudiced, or dangerous output.

Over-alignment is the opposite problem: safety measures so aggressive they break basic functionality. The model refuses reasonable requests because they pattern-match to something bad.

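Shortly after GPT-4o launched, researchers found that many of the longest Chinese tokens in its tokenizer were spam and explicit phrases, a sign that the data behind it hadn't been cleaned as carefully in Chinese as in English. Safeguards that hold up in one language don't automatically transfer to another. This is the kind of edge case that's obvious in retrospect but easy to miss.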

I recommend building your own red-teaming practice. Don't just trust the vendor's safety testing. Your use case has unique vulnerabilities they didn't anticipate.
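A red-teaming practice can start very small. The sketch below runs a set of attack prompts and a set of benign prompts through a model and reports two numbers: how often attacks got through, and how often reasonable requests were refused (the over-alignment problem). Everything here is an assumption for illustration: `model` is any callable you supply, and detecting refusals by matching a few phrases is a rough heuristic that will miss plenty.

```python
from dataclasses import dataclass

# Assumed refusal phrasings; extend these for your own model's style.
REFUSAL_MARKERS = ("i can't", "i cannot", "i won't", "unable to help")

def is_refusal(reply):
    """Heuristic: treat a reply as a refusal if it contains a known marker."""
    text = reply.lower()
    return any(marker in text for marker in REFUSAL_MARKERS)

@dataclass
class RedTeamReport:
    attack_success_rate: float  # share of attack prompts that were NOT refused
    over_refusal_rate: float    # share of benign prompts that WERE refused

def run_red_team(model, attack_prompts, benign_prompts):
    """Run both prompt sets through `model` (a callable: prompt -> reply)."""
    complied = sum(not is_refusal(model(p)) for p in attack_prompts)
    refused = sum(is_refusal(model(p)) for p in benign_prompts)
    return RedTeamReport(
        attack_success_rate=complied / len(attack_prompts) if attack_prompts else 0.0,
        over_refusal_rate=refused / len(benign_prompts) if benign_prompts else 0.0,
    )
```

Counting any non-refusal as a successful attack overstates the problem, so treat the first number as an upper bound and review the actual replies by hand.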

3. Fairness

Fairness means the system treats all people equitably—it doesn't perpetuate stereotypes or make decisions that systematically disadvantage certain groups.

Image generation is where this shows up most visibly. Ask for a "CEO" and see who you get. Ask for a "nurse." The patterns in the training data become the patterns in the output.

But fairness issues lurk everywhere: hiring recommendations, loan approvals, content moderation. Any time the model makes judgments about people, bias can creep in.

Testing helps. Use diverse benchmark datasets like CelebA, FairFace, or PRISM. But testing isn't enough—you need to think carefully about what "fair" means for your specific application. Equal outcomes? Equal treatment? Those aren't always the same thing.
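To see how those definitions can pull apart, here is a small sketch that computes two common gaps across groups. I'm loosely mapping "equal outcomes" to the selection-rate gap (often called demographic parity) and "equal treatment" to the true-positive-rate gap (often called equal opportunity); that mapping is my simplification, and the function name is made up for this example.

```python
from collections import defaultdict

def fairness_gaps(records):
    """records: iterable of (group, label, prediction) with binary label/prediction.

    Returns (parity_gap, opportunity_gap), each the max-minus-min across groups.
    Assumes every group has at least one record with label == 1.
    """
    preds = defaultdict(list)      # every prediction, per group
    pos_preds = defaultdict(list)  # predictions for truly positive cases, per group
    for group, label, pred in records:
        preds[group].append(pred)
        if label == 1:
            pos_preds[group].append(pred)

    selection = {g: sum(p) / len(p) for g, p in preds.items()}
    tpr = {g: sum(p) / len(p) for g, p in pos_preds.items()}
    parity_gap = max(selection.values()) - min(selection.values())
    opportunity_gap = max(tpr.values()) - min(tpr.values())
    return parity_gap, opportunity_gap

# Toy data: the model is perfectly accurate for both groups,
# but the groups have different base rates of positive labels.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 0, 0), ("B", 0, 0), ("B", 0, 0),
]
parity_gap, opportunity_gap = fairness_gaps(records)
```

In this toy data the opportunity gap is zero while the parity gap is not, which is exactly why you have to decide which notion of fairness your application needs.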

4. Robustness

A robust system handles messy inputs gracefully. Real-world data is never as clean as your test set.

Out-of-distribution inputs are cases the model hasn't seen before—edge cases, unusual requests, data from a different domain than the training set.

Noise is degraded input quality—blurry images, typos in text, corrupted data.

When a model encounters these, it should either handle them gracefully or acknowledge uncertainty. What it shouldn't do is confidently produce garbage.

Robustness requires ongoing red-teaming and adversarial testing. You're not done when the model works on clean data. You're done when you've thrown everything plausible at it and it either handles it or fails informatively.
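For text inputs, one simple noise test is to inject typos and check whether the model's output stays the same. The sketch below swaps adjacent letters at a configurable rate; `model` is any callable you provide, and the helper names and default rates are assumptions for illustration.

```python
import random

def add_typos(text, rate=0.1, seed=0):
    """Swap adjacent letters at roughly `rate` of eligible positions (seeded)."""
    rng = random.Random(seed)
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip past the swapped pair
        else:
            i += 1
    return "".join(chars)

def consistency_under_noise(model, inputs, rate=0.1, trials=5):
    """Fraction of noisy variants for which `model` returns the clean output."""
    same = total = 0
    for text in inputs:
        clean = model(text)
        for trial in range(trials):
            total += 1
            same += model(add_typos(text, rate, seed=trial)) == clean
    return same / total if total else 1.0
```

Exact-match comparison suits classifiers and structured outputs; for free-form text you would swap in a similarity measure of your choosing.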

5. Privacy

AI systems can leak private information in ways that aren't immediately obvious.

Security researchers have shown that carefully crafted prompts can get models to reproduce memorized training data, including PII. If someone's personal information was in the training set, it might be recoverable, even if the model wasn't designed to share it.

This creates two obligations: be careful what data goes into training, and build safeguards around what comes out. The model should recognize when it's about to output something that looks like personal information and stop.
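On the output side, a first line of defense can be as simple as pattern matching before a response leaves your system. This is a minimal sketch, assuming US-style phone and SSN formats; the patterns are illustrative and will miss many real cases (names, addresses, other locales), so treat it as a floor, not a solution.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact_pii(text):
    """Replace matches with a placeholder and report which PII types were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label.upper()} REDACTED]", text)
    return text, found
```

Logging the `found` list (without the matched values) also gives you an incident signal: a spike in redactions is worth investigating.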

6. Machine Ethics

This is the fuzziest element, but it matters. How should an AI behave in morally ambiguous situations?

Implicit ethics are the values baked into the model through training—what it defaults to when no explicit guidance is given.

Explicit ethics are rules you build on top: "don't recommend products from companies with poor labor practices" or "prioritize user safety over engagement."
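One way to keep explicit rules honest is to write them down as data rather than burying them in prompts. Here is a rough sketch using the labor-practices example: each rule is a named check applied to candidate recommendations. The vendor names, the `FLAGGED_VENDORS` set, and the record shape are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Rule:
    name: str
    violates: Callable[[dict], bool]  # True if the recommendation breaks the rule

# Hypothetical list your team would maintain from its own research.
FLAGGED_VENDORS = {"acme-sweatshop"}

RULES = [
    Rule("no-poor-labor-practices", lambda rec: rec.get("vendor") in FLAGGED_VENDORS),
]

def filter_recommendations(recs, rules=RULES):
    """Split recommendations into kept ones and (rec, broken_rule_names) pairs."""
    kept, dropped = [], []
    for rec in recs:
        broken = [rule.name for rule in rules if rule.violates(rec)]
        if broken:
            dropped.append((rec, broken))
        else:
            kept.append(rec)
    return kept, dropped
```

Naming each rule means every dropped item comes with a reason you can audit and argue about, which is the point of making ethics explicit.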

Research shows language models can reflect political biases from their training data. They have opinions, even if they shouldn't. As people interact with AI through more modalities—voice, video, embodied agents—these implicit values become more influential.

I don't have a clean answer here. But I do think every team building AI systems should be having explicit conversations about what values they want their system to express, rather than letting the training data decide.

Putting It Together

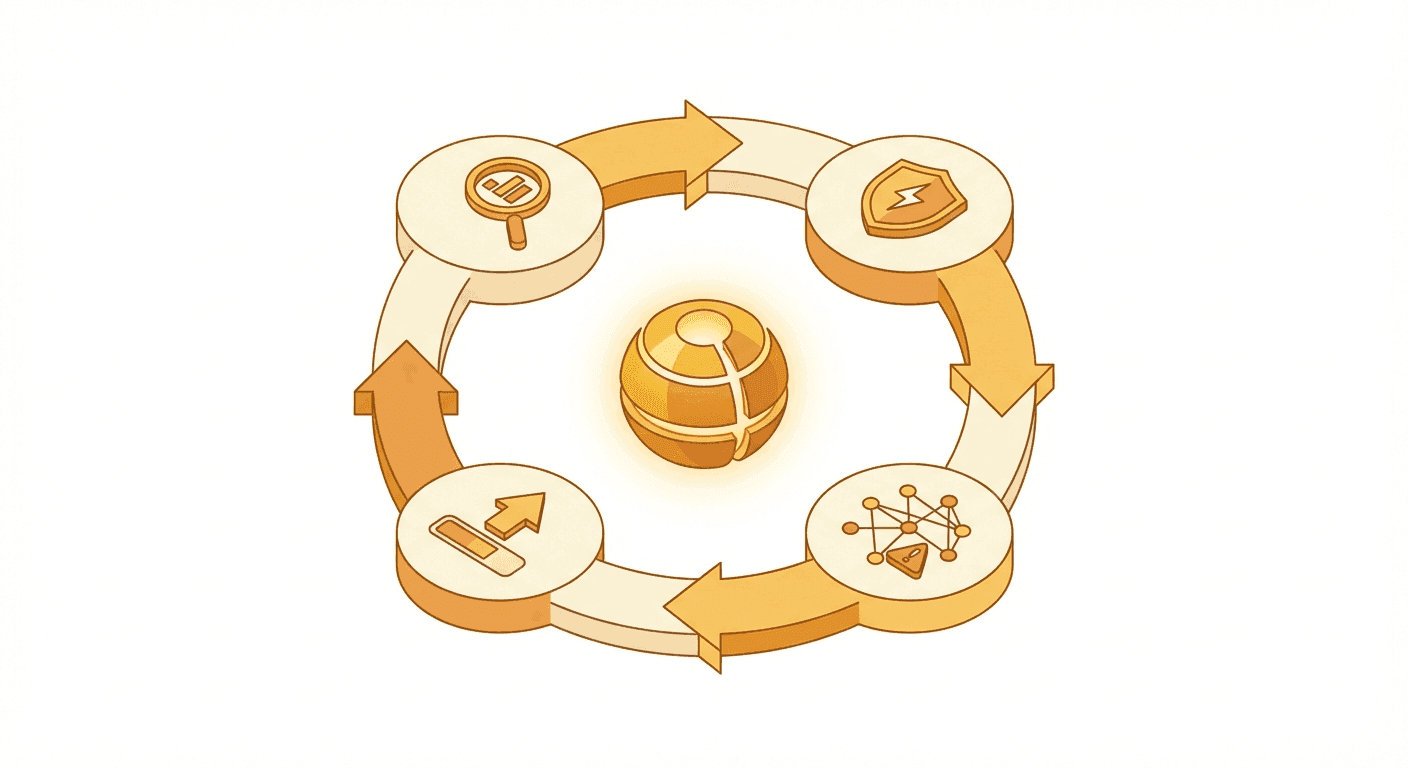

These six elements aren't a checklist you complete once. They're dimensions you monitor continuously.

The models change. The use cases change. The attack surface changes. A system that was trustworthy last quarter might not be trustworthy today.

What I've found helpful is treating this as an ongoing assessment practice rather than a one-time audit. Build evaluation into your deployment pipeline. Red-team regularly. Track incidents and near-misses.
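Building evaluation into the pipeline can be as plain as a gate that compares your latest metrics to agreed limits and fails the deploy when one slips. The metric names and threshold values below are placeholders I made up; the right numbers depend entirely on your use case.

```python
# Placeholder limits: ("max", x) means the metric must not exceed x,
# ("min", x) means it must not fall below x.
THRESHOLDS = {
    "attack_success_rate": ("max", 0.05),
    "over_refusal_rate": ("max", 0.10),
    "noise_consistency": ("min", 0.90),
}

def evaluate_gate(metrics, thresholds=THRESHOLDS):
    """Return a list of human-readable failures; an empty list means pass."""
    failures = []
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} exceeds max {limit}")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} below min {limit}")
    return failures
```

Treating a missing metric as a failure is deliberate: an evaluation that silently stopped running should block a release just like one that regressed.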

Trustworthy AI isn't a destination. It's a discipline.
